The Cyberint Research Team works around the clock to unearth the latest threats to SMBs and enterprises. The team stays on top of the latest TTPs and monitors rising threat groups, malware, and trends.

Artificial Intelligence, often abbreviated to AI, refers to the development of computer systems capable of carrying out tasks and rendering decisions that traditionally demand human intelligence. This entails the creation of algorithms and models that empower machines to acquire knowledge from data, discern patterns, and adjust to unique information or scenarios.

AI involves instructing computers to emulate human thinking and learning processes. It grants machines the capacity to process and scrutinize substantial datasets, detect patterns or irregularities, and formulate predictions or choices based on this data. AI has a wide range of applications, spanning from image and speech recognition to natural language processing, robotics, and cybersecurity, among others.

In essence, AI endeavors to replicate human intelligence for the purpose of addressing intricate challenges, automating tasks and increasing efficiency and precision across diverse domains.

ChatGPT emerged in November 2022, swiftly becoming a remarkable AI tool renowned for generating human-like text responses based on prompts or conversational context. Its ability to comprehend and produce coherent and contextually relevant answers across a vast spectrum of inquiries and requests has made it extremely popular among businesses and individual users.

As demonstrated in Figure 1 (by SimilarWeb), ChatGPT is the fastest-growing service in the history of the Internet, gaining a significant user base in a short time. It has become increasingly popular among professionals and companies from a wide range of industries. People from all walks of life, including professionals, students, and school kids, are using ChatGPT to heighten their efficiency and productivity. They have integrated it into their workflows, leveraging its capabilities in their respective fields and areas of interest.

The increase in search queries about ChatGPT and other AI models appears to be accompanied by an increase in queries with malicious intent, coming from threat actors as well as from cybersecurity influencers (Figure 2).

Artificial Intelligence introduces a range of risks within the realm of cybersecurity. Like any technological advancement, AI can be harnessed for both beneficial and malicious purposes. Cybercriminals can utilize AI tools, originally designed to assist humanity, to perpetrate fraudulent activities, scams, and various cybercrimes.

Head of the Canadian Centre for Cyber Security Sami Khoury stated on July 20 this year that his organization had observed the utilization of AI in various malicious activities, including crafting more targeted phishing emails, generating malicious code, and spreading misinformation and disinformation.

AI model theft presents a risk facilitated through network attacks, social engineering tactics, and vulnerability exploitation by threat actors, including state-sponsored agents, insider threats, and conventional hackers. Stolen AI models can be manipulated and repurposed to aid attackers in various malicious endeavors, amplifying the societal risks associated with artificial intelligence.

WormGPT is an AI module that builds on the GPT-J language model, which was developed in 2021. This tool is presented as a blackhat alternative to GPT models, specifically designed for malicious activities. It offers a wide range of features, including support for unlimited characters, the retention of chat history, and the ability to format code. WormGPT claims to have undergone training using a diverse set of data sources, with a particular emphasis on data related to malware. However, the specific datasets used during the training process remain undisclosed at the discretion of the tool’s creator.

Moreover, the creators have opened a dedicated site, https://wormgpt[.]co, which sells the tool under different subscription plans.

Even though the site has been functioning and the model is being utilized by numerous users worldwide, the individuals behind the WormGPT project, who are associated with a well-known Russian hacking forum, have chosen to cease the project’s operations and discontinue further updates. As indicated in their farewell message, they stated that anyone could replicate the functionalities of WormGPT. This decision hints at the potential emergence of a new realm where scammers, developers, sellers, and buyers might find common ground in a legitimate business endeavor.

In addition to the WormGPT model, threat actors continually advance the development of more sophisticated AI models to meet their nefarious requirements. As shown in Figure 5, a threat actor responded in a thread, announcing their plans to create an Evil-GPT-Web3 model, with the intention of completing it by the end of August.


It’s crucial to point out that in addition to WormGPT, there are several other offensive AI tools that have been developed and can be used for malicious purposes. Some examples include BurpGPT, PentestGPT, FraudGPT, and PassGPT.

Threat actors may leverage generative AI and large language models to elevate cyber attacks to unprecedented levels of speed and complexity. These tools empower attackers to discover innovative ways to exploit cloud complexities and capitalize on geopolitical tensions for sophisticated attacks. Moreover, they can use generative AI to fine-tune their ransomware and phishing tactics.

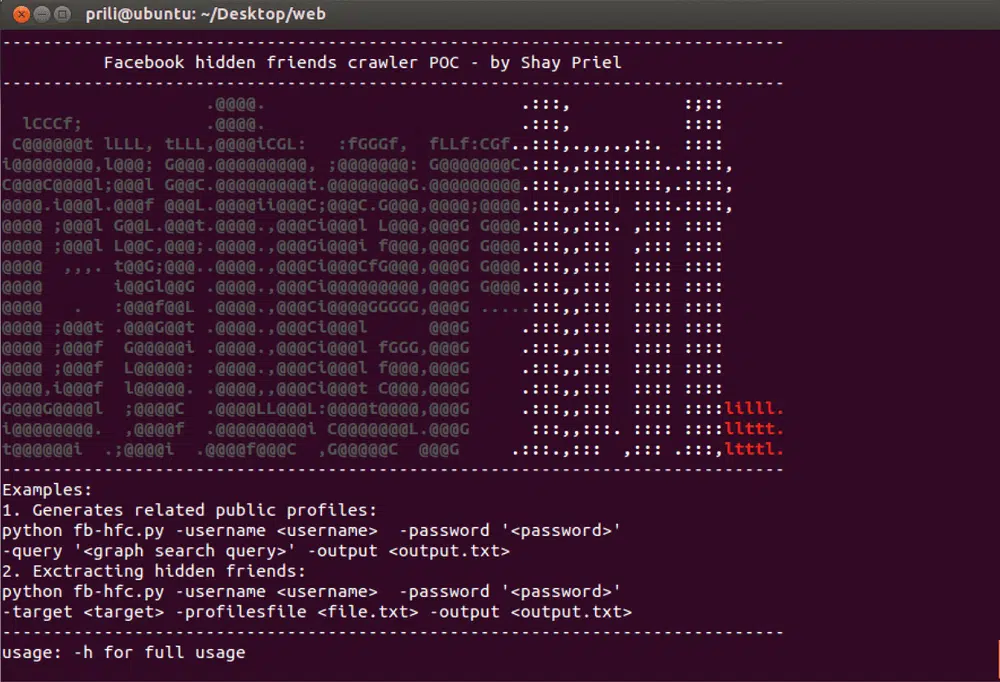

Threat actors frequently share information about new tools, techniques, and various cybersecurity topics, often stating that it’s for “educational purposes only” (Figure 6). In a well-known Russian hacking forum, an individual posting provides details about several AI-based tools. These tools could potentially aid penetration testers in identifying new vulnerabilities, examining network traffic, scrutinizing application behavior, and more. Conversely, the disseminated information empowers threat actors to employ these tools for their own malicious purposes.

AI, such as ChatGPT, possesses remarkable computational capabilities. Although AI systems like ChatGPT have safeguards in place to prevent users from generating malicious code, researchers have identified ingenious methods to bypass these safeguards and produce malware. For instance, a researcher discovered a loophole that enabled the creation of a nearly undetectable data-theft executable, exhibiting the sophistication typically associated with state-sponsored threat actors.

This could mark the beginning, with subsequent AI-powered tools potentially empowering individuals with basic programming skills to craft automated malware, resembling advanced malicious bots. These malicious bots operate autonomously, capable of data theft, network infiltration, and system attacks with minimal human intervention.

As evident in the example provided below (Figure 7), a threat actor uses the ChatGPT model to generate malicious code that could potentially be employed in various types of attacks. As previously mentioned, the actor attempts to conceal his personal malicious intentions by stating that the manual should not be utilized for malicious purposes.

With suitable footage, anyone can generate deepfake content using freely available apps. Free AI-powered tools can also produce remarkably realistic fake voices trained on mere seconds of audio.

Consequently, AI is now employed in virtual kidnapping scams and has potential for other forms of impersonation fraud. These may encompass fraudulent schemes, such as investment opportunities and giveaways, propagated through channels such as email and social media platforms.

In recent incidents, fraudsters employed AI voice generator technology to create the illusion that they had kidnapped children, and demanded substantial ransoms from concerned parents. In a case reported last month, scammers mimicked the voice of a 15-year-old girl from Arizona. They impersonated her when contacting her mother, threatening to harm “her” and demanding a $1 million ransom. Fortunately, the mother managed to verify the safety of her daughter. Nevertheless, this instance highlights the extent to which scammers can exploit AI technology.

Phone and cyber scams took a total of approximately $10 billion out of the pockets of Americans in 2022, according to the FBI Internet Crime Complaint Center.

AI poses a privacy challenge to both individuals and organizations due to the intricate nature of the algorithms employed in AI systems. As AI continues to advance, it can make decisions based on subtle data patterns that are often challenging for humans to discern. Consequently, individuals may remain unaware that their personal data is being utilized to make decisions that impact them.

While AI technology offers numerous potential advantages, it also presents several notable challenges. One of the primary concerns revolves around the possibility of AI being exploited to infringe on privacy. AI systems rely on extensive amounts of data, including personal information, and if this data falls into the wrong hands, it could have devastating impacts.

Despite the numerous benefits associated with working with ChatGPT, it also provides a new path for threat actors’ malicious intentions and offers them a valuable tool for targeting and attacking their victims. Cyberint’s analysis of the Argos malware log collection has revealed a substantial number – 259,887 – of compromised ChatGPT accounts since the beginning of 2023.

Both private and corporate ChatGPT accounts are being sold by threat actors in dark web forums and marketplaces, where they serve as active platforms for such transactions. The highest bidder can easily acquire these compromised accounts.

Given that all conversations with ChatGPT are saved, if a threat actor manages to compromise a ChatGPT account, they could gain access to the complete conversation history. This becomes a concern as many employees who use ChatGPT also share sensitive information while seeking assistance. Consequently, if a threat actor gains access to these compromised accounts, they can potentially obtain the company’s most sensitive information. This includes insights into employees, customers, secret projects, code development, and more.

Armed with harmful information extracted from the conversation history, the threat actor may then leak the company’s sensitive data and plan targeted attacks utilizing the acquired intelligence. They can use social engineering techniques by familiarizing themselves with the company and its future plans, impersonating an employee, and potentially deceiving other employees or external contacts into revealing confidential information or engaging in unauthorized actions.

Furthermore, threat actors could exploit vulnerabilities or identify entry points based on the technologies or packages discovered through their exploration of the company’s activities in ChatGPT. Threat actors may also gain visibility into code snippets, discussions, and other sensitive information shared in the ChatGPT account. This could encompass proprietary code, credentials, API keys, or any other confidential data relevant to the company’s projects.

There is also a risk that intellectual property theft, in the form of unique algorithms, ideas, and innovative solutions discussed or shared on ChatGPT, could be stolen and used for the threat actor’s own purposes or be sold to competitors.

Accelerated Learning – GenAI is pivotal in diminishing the training time necessary for crafting malware code, ultimately leading to significantly enhanced operational efficiency. This reduction in training requirements empowers threat actors to streamline their efforts and expedite the development of malicious code, allowing them to adapt and evolve their tactics more swiftly in the ever-changing landscape of cybersecurity.

Streamlined Automation – GenAI serves as a powerful tool for seamlessly connecting various tasks and effectively utilizing supplementary resources to achieve more advanced objectives. This streamlined automation not only enhances the efficiency of cyber operations but also enables threat actors to orchestrate complex and coordinated attacks with greater ease. By facilitating the integration of multiple components and resources, GenAI empowers cybercriminals to optimize their strategies, allowing for more sophisticated and impactful execution of their malicious activities.

Boosts Scalability – GenAI is proving to be a pivotal asset by rapidly generating valuable content that covers all stages of the kill chain. This comprehensive coverage spans from the initial reconnaissance phase, where threat actors gather crucial information about their targets, to the exploitation phase, where vulnerabilities are leveraged to infiltrate and compromise systems. GenAI’s content creation agility not only accelerates the progression of cyber operations but also allows malicious actors to adapt to evolving circumstances and tailor their attacks more effectively. This scalability ultimately equips threat actors with the versatility needed to pursue a wide range of targets and objectives, enhancing their overall operational impact.

Credibility and Diversity – GenAI has emerged as a critical tool capable of not only recognizing but also seamlessly integrating meticulously selected content from diverse sources. This capability significantly bolsters the credibility of the bait used in cyber operations. By amalgamating content from various reputable and relevant outlets, GenAI helps malicious actors to create convincing and persuasive lures, increasing the likelihood of successful engagements with potential victims. This dynamic approach to content curation enables threat actors to establish a stronger rapport with their targets and reinforces the authenticity of their malicious campaigns, ultimately augmenting their chances of achieving their nefarious objectives.

Precision – The automation-driven Large Language Model (LLM) stands out as an invaluable asset, offering the unique capability to be finely tuned using data from both defenders and attackers. Cybersecurity professionals can use LLMs to optimize their performance according to the specific requirements of their security strategies. By amalgamating insights from defenders and attackers alike, these finely tuned LLMs can effectively adapt to evolving threats, adjusting their responses and strategies in real time. This level of precision not only enhances the effectiveness of cyber defenses but also enables more strategic and proactive threat mitigation, ultimately contributing to a more resilient and secure digital landscape.

In the coming years, threat actors are poised to capitalize on advancements in AI, leveraging them to create a breed of intelligent malware that’s capable of adapting and learning from its surroundings. This next-generation malware is set to surpass the capabilities of human hackers, posing a substantial risk by exposing sensitive information, including mission-critical assets, and inflicting financial, operational, and reputational harm.

Its design is purpose-driven, indicating that it has a specific objective, such as the theft of sensitive information or the compromise and disruption of critical systems.

Intelligent malware can conduct its attacks autonomously, exhibiting self-evaluation and self-replication capabilities. This means it can assess its own performance, adapt its strategies accordingly, and employ the remote agents it generates to spread and assign tasks. Distinguishing between secure and unauthorized access will become increasingly challenging, with even previously well-guarded information potentially falling victim to compromise.

Traditional methodologies for malware detection and removal will swiftly lose their effectiveness, necessitating the deployment of AI-driven solutions to combat this evolving threat landscape. This shift will set off a race for supremacy in the realm of AI, as offensive and defensive AI technologies vie for dominance.

It is our presumption that governments with extensive resources, investments, and operations at their disposal will craft bespoke AI models tailored to their specific needs, particularly within the realm of cybersecurity. These nation-state entities will leverage these automated tools to carry out targeted attacks on a wide spectrum of organizations and individuals, aligning with their objectives and personal agendas.

Once this comes to fruition, it will mark a profound transformation in the realm of cybersecurity and our technological existence, ushering in an era of unprecedented change.

As AI becomes integral to systems like autonomous vehicles, manufacturing equipment, and medical devices, the potential risks to physical safety escalate. For instance, a cybersecurity breach in an AI-driven self-driving car could jeopardize the physical safety of passengers. Similarly, manipulations of datasets pertaining to construction equipment maintenance tools could lead to hazardous conditions at construction sites.

While AI is a powerful tool, it is susceptible to data manipulation since it relies on training data. If this data is tampered with or poisoned, AI-powered tools may produce unexpected or even malicious results. Attackers could poison a training dataset with malevolent data to influence the model’s outcomes. This vulnerability can be particularly detrimental to industries such as healthcare, automotive, and transportation.

Organizations of varying sizes and across diverse sectors are leveraging AI to bolster their cybersecurity efforts. For instance, entities worldwide, from banks to government bodies, employ AI for identity authentication, while industries such as finance and real estate utilize it to detect anomalies and mitigate fraud risks.

Here’s a deeper look at how AI contributes to cybersecurity:

Sophisticated malware can circumvent conventional cybersecurity measures through various evasion tactics, such as code and structure modifications. However, advanced antivirus software harnessing AI and ML (Machine Learning) can identify irregularities within a potential threat’s overall structure, programming logic, and data.

AI-powered threat detection tools are pivotal in safeguarding organizations by proactively identifying emerging threats and enhancing alerting and response capabilities. Furthermore, AI-based endpoint security solutions provide protection for laptops, smartphones, and servers in an organization.

By utilizing generative AI, cybersecurity professionals can transition from a reactive stance to a proactive one. Generative AI can be employed to develop predictive models capable of identifying new threats and mitigating risks.

These predictive models yield several advantages, including accelerated threat detection, time savings, cost reduction, enhanced incident response, and improved risk protection.

Phishing emails remain a substantial threat vector, with threat actors using phishing expeditions to steal sensitive data and financial assets. Moreover, phishing emails are becoming increasingly challenging to distinguish from legitimate correspondence.

AI enriches cybersecurity by bolstering phishing detection. Email filters leveraging AI can analyze text to flag suspicious email patterns and block various types of spam.
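To make the idea of text-based filtering concrete, here is a minimal sketch of a naive Bayes classifier that scores an email body as phishing or legitimate. It is an illustration only, not how any particular product works: the class name `NaiveBayesFilter`, the labels, and the four training sentences are all invented for this example, and a real filter would train on a large labeled corpus and use many more signals than words alone.

```python
import math
import re
from collections import Counter


def tokenize(text):
    """Lowercase the text and split it into word tokens."""
    return re.findall(r"[a-z0-9']+", text.lower())


class NaiveBayesFilter:
    """Tiny multinomial naive Bayes classifier with add-one smoothing."""

    def __init__(self):
        self.word_counts = {"phish": Counter(), "ham": Counter()}
        self.doc_counts = {"phish": 0, "ham": 0}

    def train(self, text, label):
        """Add one labeled example ("phish" or "ham") to the model."""
        self.word_counts[label].update(tokenize(text))
        self.doc_counts[label] += 1

    def _log_score(self, tokens, label):
        # log P(label) + sum of log P(token | label), smoothed so that
        # unseen words do not zero out the whole score.
        vocab = set(self.word_counts["phish"]) | set(self.word_counts["ham"])
        total_words = sum(self.word_counts[label].values())
        total_docs = sum(self.doc_counts.values())
        score = math.log(self.doc_counts[label] / total_docs)
        for tok in tokens:
            count = self.word_counts[label][tok]
            score += math.log((count + 1) / (total_words + len(vocab)))
        return score

    def is_phishing(self, text):
        tokens = tokenize(text)
        return self._log_score(tokens, "phish") > self._log_score(tokens, "ham")


# Toy training data, invented for illustration only.
spam_filter = NaiveBayesFilter()
spam_filter.train("urgent verify your account password now", "phish")
spam_filter.train("your account is suspended click the link to verify", "phish")
spam_filter.train("meeting notes attached for the project review", "ham")
spam_filter.train("lunch on friday to discuss the quarterly report", "ham")
```

Calling `spam_filter.is_phishing("please verify your password")` flags the message, because its words appear only in the phishing examples. Note that well-written, AI-generated phishing text may share little vocabulary with older samples, which is one reason production filters also weigh sender reputation, links, and headers.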

Bots pose a threat to networks and websites, potentially causing security, productivity, and revenue setbacks for organizations. These automated entities can seize control of accounts through stolen credentials, aiding cybercriminals in perpetrating fraud and scams.

Software equipped with machine learning-based models can scrutinize network traffic and data to discern bot behaviors, allowing cybersecurity experts to effectively counteract them. AI can also contribute to the creation of more robust CAPTCHA defenses against bots.
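As a rough illustration of what "bot behavior" can look like in traffic data, the sketch below computes two simple features from a client's request timestamps: the average gap between requests and how regular those gaps are. The hand-set thresholds stand in for what a trained machine learning model would learn from labeled traffic; the function names and threshold values are assumptions made for this example.

```python
from statistics import mean, pstdev


def interval_features(timestamps):
    """Return (mean gap, coefficient of variation) for request times in seconds."""
    gaps = [later - earlier for earlier, later in zip(timestamps, timestamps[1:])]
    avg = mean(gaps)
    # Coefficient of variation: near 0 means machine-like, evenly spaced requests.
    cv = pstdev(gaps) / avg if avg > 0 else 0.0
    return avg, cv


def looks_automated(timestamps, max_mean_gap=1.0, max_cv=0.1):
    """Flag clients that send fast, very evenly spaced requests.

    The thresholds are illustrative placeholders, not tuned values.
    """
    if len(timestamps) < 5:
        return False  # too little data to judge
    avg, cv = interval_features(timestamps)
    return avg <= max_mean_gap and cv <= max_cv


scripted_client = [0.0, 0.5, 1.0, 1.5, 2.0, 2.5]
human_client = [0.0, 3.2, 4.1, 15.8, 16.0, 42.5]
```

Here `looks_automated(scripted_client)` returns `True` and `looks_automated(human_client)` returns `False`. Bots that randomize their timing would evade a check this simple, which is why real systems combine many features.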

Cybersecurity tools employing artificial intelligence can reduce the number of false positives, improving threat detection accuracy. These tools can also be programmed to autonomously address low-probability threats, freeing up the security team’s time and resources.

Many small and medium-sized businesses may lack the resources to maintain a large in-house cybersecurity team to combat increasingly sophisticated threats around the clock. However, they can invest in AI-powered cybersecurity technology that offers continuous monitoring, enhances efficiency, and reduces costs while scaling with a company’s growth.

Furthermore, AI enhances staff efficiency as it operates tirelessly, maintaining consistent service quality throughout the day and minimizing the risk of human error. AI’s capacity to manage vast volumes of data surpasses that of a human security team.

Here are some guidelines for safeguarding against the potential risks associated with AI:

Regularly assess the reputation and security of any AI systems you utilize to avoid privacy and security issues. Organizations should periodically audit their systems to identify vulnerabilities and minimize AI-related risks. This auditing process can be enhanced by the expertise of cybersecurity and AI professionals who can perform tasks such as penetration testing, vulnerability assessments, and comprehensive system reviews.

Many individuals share sensitive information with AI without fully comprehending the associated privacy risks. For instance, employees at reputable organizations have been observed inputting confidential company data into ChatGPT. Even a medical professional submitted a patient’s name and medical condition to the chatbot when composing a letter, without realizing the security risks posed by ChatGPT. Such actions not only jeopardize security but also violate privacy regulations like HIPAA. While AI language models may not intentionally disclose information, conversations are recorded for quality control purposes and can be accessed by system maintenance teams. Therefore, it is advisable to refrain from sharing any personal information with AI platforms.
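One practical safeguard is to scrub obvious identifiers from a prompt before it leaves the organization. The sketch below is a minimal example of that idea using regular expressions. The patterns are assumptions chosen for illustration (the API key format in particular is hypothetical), and pattern matching alone will not catch names, medical details, or proprietary code, so it complements user training rather than replacing it.

```python
import re

# Illustrative patterns only; real deployments need patterns that match
# the organization's own identifiers and secret formats.
REDACTION_PATTERNS = [
    ("EMAIL", re.compile(r"\b[\w.+-]+@[\w-]+(?:\.[\w-]+)+\b")),
    # Hypothetical key format: a short prefix, a separator, 16+ alphanumerics.
    ("API_KEY", re.compile(r"\b(?:sk|pk|api)[-_][A-Za-z0-9]{16,}\b")),
    # 13 to 16 digits, optionally separated by spaces or hyphens.
    ("CARD", re.compile(r"\b\d(?:[ -]?\d){12,15}\b")),
]


def redact(prompt):
    """Replace each match of a known sensitive pattern with a labeled marker."""
    for label, pattern in REDACTION_PATTERNS:
        prompt = pattern.sub(f"[{label} REDACTED]", prompt)
    return prompt
```

For example, `redact("Email jane.doe@example.com about the invoice")` returns `"Email [EMAIL REDACTED] about the invoice"`.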

AI heavily relies on its training data to produce accurate outcomes. If this data is tampered with or compromised, AI can generate unexpected and potentially harmful results. To shield AI systems from data poisoning, organizations should invest in state-of-the-art encryption, access controls, and robust backup solutions. Networks should be fortified with firewalls, intrusion detection systems, and robust password protocols.
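One way to notice tampering with stored training data is to record a cryptographic fingerprint of each file when the dataset is approved and compare against it before every training run. The sketch below assumes the dataset is available as a mapping of file names to bytes; the function names are invented for this example. It only detects changes made after the baseline was taken, so it does not help if the data was already poisoned at collection time.

```python
import hashlib


def fingerprint(records):
    """Map each file name to the SHA-256 hex digest of its contents."""
    return {name: hashlib.sha256(data).hexdigest() for name, data in records.items()}


def find_tampered(records, manifest):
    """Return sorted names that were modified, removed, or added since the manifest."""
    current = fingerprint(records)
    changed = {name for name in manifest if current.get(name) != manifest[name]}
    added = set(current) - set(manifest)
    return sorted(changed | added)


# Toy dataset, invented for illustration.
baseline = {
    "train_a.csv": b"label,text\n0,hello\n",
    "train_b.csv": b"label,text\n1,win a prize\n",
}
manifest = fingerprint(baseline)
```

The manifest itself needs the same protection as the data (for example, stored separately with restricted write access), otherwise an attacker can simply update both.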

Adhere to industry best practices for software maintenance to mitigate AI-related risks. This includes regularly updating AI software, frameworks, operating systems, and applications with the latest patches and updates to minimize the likelihood of exploitation and malware attacks. Additionally, protect your systems with advanced antivirus technology designed to detect and halt sophisticated malicious threats. Consider investing in comprehensive network and application security measures to fortify your defenses further.

AI vulnerability management is a comprehensive approach to proactively identifying, assessing, prioritizing, and mitigating vulnerabilities in AI systems. It goes beyond traditional cybersecurity practices by considering the unique characteristics of AI, including the models, data, and integration points. By effectively managing vulnerabilities in AI systems, organizations can enhance their overall cybersecurity posture and reduce the risk of data breaches and leaks.

The landscape of AI risks is extensive and constantly evolving, necessitating proactive measures to equip your workforce with the knowledge and skills needed to navigate this intricate terrain. Collaborating with experts in both cybersecurity and AI is essential to providing comprehensive training tailored to the unique challenges posed by artificial intelligence such as AI-Enhanced Threats, AI-generated malware, AI-driven phishing attacks, etc.

We are on the verge of a new era of cybersecurity. While the wonders of AI will help us achieve new goals and reach new technological heights, they will do the same for threat actors worldwide.

With these new advancements, we will find ourselves facing new malware types and TTPs for threat actors to add to their arsenal.

In addition, this technology might improve additional threat types and techniques such as fraud, phishing and social engineering campaigns.

AI is one of the most life-changing technologies introduced to the world in a long time, and like any other technology, it will serve both the good and the bad.

Cyberint’s product uses AI in several important ways. For example, customers can receive an AI-generated summary of complex raw intelligence items with a few clicks. Cyberint also uses machine learning to identify messages related to fraud among millions of communications collected from the deep and dark web.

Perhaps more importantly, the Cyberint platform provides multiple layers of defense against the threats that will advance and accelerate as adversaries leverage AI in their attacks.

As noted previously in this report, threat actors are already using prompt injection techniques to trick LLMs like ChatGPT into providing text that can be used in phishing emails. It is reasonable to assume that this will make phishing emails more convincing, as they will be free from the grammatical errors and spelling mistakes that often indicate an email is suspicious.

At the same time, because threat actors don’t need to spend time writing this content themselves, it’s also safe to assume that phishing attacks will be launched faster and more frequently. According to IBM, 41% of attacks they investigated began with phishing. This threat will continue to be a serious challenge for organizations across all industries and regions.

Cyberint provides several layers of defense against phishing attacks that impersonate your organization’s brand(s). The first layer is domain protection, which immediately identifies lookalike domains as soon as they are registered. While not all registered domains have websites hosted on them, the Cyberint platform allows you to monitor these domains in case a malicious site is deployed.
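To illustrate what "lookalike" can mean in practice, the following sketch compares a newly registered domain against a protected one using two common heuristics: digit-for-letter substitutions and a small edit distance. This is a generic illustration under stated assumptions, not a description of how the Cyberint platform detects lookalikes; the substitution table, the threshold, and the function names are all chosen for the example.

```python
def edit_distance(a, b):
    """Levenshtein distance between two strings (insert, delete, substitute)."""
    prev = list(range(len(b) + 1))
    for i, ca in enumerate(a, start=1):
        curr = [i]
        for j, cb in enumerate(b, start=1):
            cost = 0 if ca == cb else 1
            curr.append(min(prev[j] + 1, curr[j - 1] + 1, prev[j - 1] + cost))
        prev = curr
    return prev[-1]


# A few digit-for-letter swaps often seen in typosquatting (illustrative subset).
HOMOGLYPHS = str.maketrans({"0": "o", "1": "l", "3": "e", "5": "s"})


def is_lookalike(candidate, brand, max_distance=2):
    """Return True if candidate is close to, but not identical to, brand."""
    cand = candidate.lower()
    brand = brand.lower()
    if cand == brand:
        return False  # the legitimate domain itself
    normalized = cand.translate(HOMOGLYPHS)
    return normalized == brand or edit_distance(cand, brand) <= max_distance
```

With these settings, `is_lookalike("examp1e.com", "example.com")` returns `True`, while an unrelated domain such as `weather.org` does not match. A fixed distance threshold produces false positives for short names, so real systems typically add further checks.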

The second layer of defense against phishing comes from the detection of phishing sites, independent of the domain, subdomain, or subdirectories in use. In other words, Cyberint can identify phishing sites that illegally use your trademarks, such as brand names and logos, even if your organization’s name is nowhere to be found in the URL. Lastly, Cyberint’s proprietary Phishing Beacon technology alerts you to phishing sites the moment they are published.

Threat actors often copy/paste a target website’s source code (i.e. the HTML and CSS) and then make minor modifications to launch a phishing attack. This makes the site look as authentic as possible while also making the attack easier to launch. By adding an obfuscated script to sensitive pages on your website, you will receive an Alert the very instant a threat actor launches a phishing site that uses the same source code as your legitimate site.
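The general idea behind this kind of beacon can be sketched as follows: a script embedded in the page reports the address it is being served from, and a server-side check raises an alert when that address is not one of the organization's own hosts. The code below shows only the server-side comparison and is a conceptual sketch, not Cyberint's Phishing Beacon implementation; `LEGITIMATE_HOSTS` and `check_beacon` are hypothetical names.

```python
from urllib.parse import urlparse

# Hosts allowed to serve the protected pages (hypothetical values).
LEGITIMATE_HOSTS = {"example.com", "www.example.com"}


def check_beacon(page_url):
    """Return an alert string if the reporting page is hosted somewhere unexpected.

    page_url is the URL reported by the embedded script, i.e. the location
    from which the copied page source is being served.
    """
    host = (urlparse(page_url).hostname or "").lower()
    if host in LEGITIMATE_HOSTS:
        return None
    return f"ALERT: page source served from unexpected host {host!r}"
```

A report from `https://www.example.com/login` yields `None`, while a report from a cloned page on another domain yields an alert. The approach depends on the attacker copying the page without finding and removing the script, which is why the text above stresses obfuscation.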

It has also been noted that threat actors can trick AI tools into writing malicious code. There are already many types of malware in existence—banking trojans, keyloggers, remote access trojans, infostealers—and many of these malicious programs can be purchased on the dark web for a nominal fee. By leveraging AI, threat actors may be able to:

Cyberint, as the leading provider of impactful intelligence, collects more than 165,000 malware logs every month from the deep and dark web. If one of your organization’s assets (domains, IP addresses, brand names, etc.) appears in a malware log, you receive an Alert so you can respond to the threat before it leads to a costly incident. Though this is not a preventative measure, it is part of a defense-in-depth strategy that helps you identify these risks should other solutions, like AV and EDR, fail to detect an infection.

Finally, threat actors may be able to co-opt LLMs to write exploit code for known and unknown vulnerabilities. The number of documented CVEs continues to grow every year (there were over 20,000 CVEs discovered in 2022), making it very difficult to prioritize patch management efforts. While only a small number of these CVEs are ever exploited in the wild, we may see the share of CVEs that get exploited grow as adversaries trick AI into writing exploit POCs for high-severity vulnerabilities.

Cyberint’s Attack Surface Monitoring module detects all of your organization’s external assets, such as domains, subdomains, and IP addresses. It then checks these assets to see what DNS and/or WHOIS records are associated with them, which ports are open, what software and services are running, and other potential risk factors.

If there are known CVEs associated with a specific version of a specific technology running on one of your external assets, you will receive an alert. By using Cyberint’s CVE Intelligence module, you can see a wealth of information regarding that specific CVE, including whether it has been exploited in the wild by bad actors, whether there is a POC exploit available, whether it has been mentioned on code repository sites like GitHub, whether it has been mentioned in deep and dark web forums, and so on.
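The matching and prioritization described above can be sketched in a few lines. The example assumes an inventory of (host, product, version) tuples and a list of CVE records carrying two of the intelligence signals mentioned: exploitation in the wild and POC availability. Everything here is illustrative: the product names, hosts, and CVE identifiers are placeholders, and the data model is an assumption, not the structure used by any Cyberint module.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class CveRecord:
    cve_id: str
    product: str
    version: str
    exploited_in_wild: bool
    poc_available: bool


def priority(record):
    """Rank exploited-in-the-wild first, then CVEs with a public POC."""
    return (record.exploited_in_wild, record.poc_available)


def match_assets(assets, cve_records):
    """Return (host, CveRecord) pairs for exact product/version matches, most urgent first."""
    findings = []
    for host, product, version in assets:
        for rec in cve_records:
            if (rec.product, rec.version) == (product, version):
                findings.append((host, rec))
    findings.sort(key=lambda finding: priority(finding[1]), reverse=True)
    return findings


# Placeholder data: these are not real products or CVE identifiers.
records = [
    CveRecord("CVE-PLACEHOLDER-1", "exampled", "2.4.1", False, True),
    CveRecord("CVE-PLACEHOLDER-2", "samplelib", "1.0.0", True, False),
]
assets = [
    ("a.example.com", "exampled", "2.4.1"),
    ("b.example.com", "samplelib", "1.0.0"),
    ("c.example.com", "exampled", "2.5.0"),
]
```

With this data, `match_assets(assets, records)` lists `b.example.com` first because its CVE is marked as exploited in the wild, and omits `c.example.com` because its version has no matching record. Real advisories usually describe affected version ranges rather than single versions, so exact matching is a simplification.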

While the challenge of software vulnerabilities is not going anywhere, receiving real-time alerts regarding vulnerable technologies running on your Internet-facing assets plus intelligence on the risk that those specific CVEs pose for you goes a long way towards keeping your organization secure.

©1994–2025 Check Point Software Technologies Ltd. All rights reserved.
