According to a recent report by Deep Instinct, 61% of organizations have seen deepfake incidents increase in the past year, and 75% of these attacks impersonated the CEO or another C-suite executive. Moreover, 97% of them are concerned they will suffer a security incident as a result of adversarial AI.

DEFINITION:

Deepfakes, generated by advanced AI technology, encompass audio, video, or images crafted with malicious intent to fabricate false scenarios. Threat actors typically disseminate these deceptive materials across social media platforms to manipulate their audience.

Notably, hybrid and remote work environments make employees significantly more susceptible to deepfake social engineering attacks. Reduced in-person interaction means employees may be less likely to consult with colleagues or IT departments about suspicious communications. Additionally, remote workers’ potential reliance on personal phones for work communications makes them prime targets for social engineering attacks.

Just a few days before Slovakia’s pivotal election in September 2023, suspicious audio recordings spread on Meta platforms. In the first, the leading candidate seemingly boasted about rigging the election. In the second, he proposed doubling the cost of beer. Both went viral, leading to his defeat by a pro-Moscow opponent. AFP’s fact-checking department later concluded that the recordings had been created maliciously using AI deepfake technology.

In 2024, elections will be held in the UK, France, the US, and other nations. These elections are likely to face more disruption from advanced deepfake materials than ever before, while both media and governments have limited resources and authority to combat misinformation.

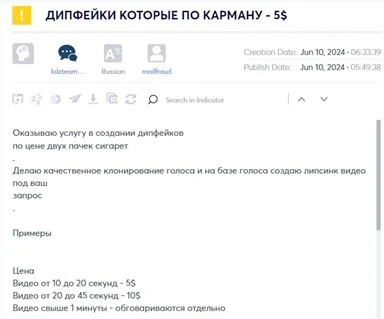

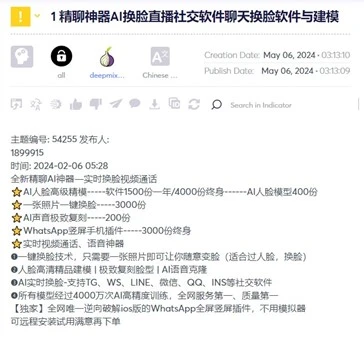

While sophisticated phishing, smishing and vishing attacks have been on the rise in recent years, the emergence of deepfake AI technologies poses higher financial risks for businesses.

In early 2024, threat actors used deepfake technology to impersonate the CFO of a Hong Kong-based multinational company on a video conference call, deceiving a finance worker into transferring $25 million.

In another significant deepfake phishing attack, a bank manager in the UAE fell victim to a voice-cloning scam. The threat actors used AI voice cloning to impersonate a bank director and lure the bank manager into transferring $35 million.

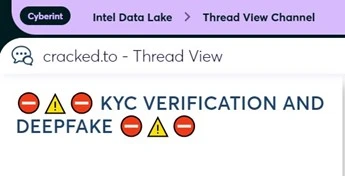

Cyberint’s observations indicate that both financial institutions and finance employees in non-financial institutions are increasingly vulnerable to deepfake attacks. Financial institutions face heightened risk because deepfake technology can potentially bypass KYC (Know-Your-Customer) verification processes. The KYC check is the mandatory process of identifying and verifying a client’s identity when an account is opened and periodically thereafter. In other words, banks must ensure that their clients are genuinely who they claim to be.

By bypassing KYC verification, threat actors can initiate fraudulent activities. Meanwhile, finance employees, regardless of their institution, are prime targets for deepfake attacks designed to deceive them into authorizing large-scale transfers that benefit threat actors. This dual vulnerability underscores the critical need for enhanced security measures and training to combat the evolving threat of deepfakes.

Deepfake attacks that impersonate executives or create fabricated personas through manipulated videos and AI-generated content can severely damage a company’s reputation. The dissemination of misinformation via deepfakes can also lead to financial repercussions, eroding trust among investors, customers, and stakeholders and harming the company’s brand and market standing. Moreover, fraudulent customer interactions facilitated by deepfake technology can expose corporations to legal and financial risks. To counter these threats effectively, organizations must implement robust cybersecurity measures and proactive strategies to defend themselves and protect their integrity and trustworthiness.
